30 Dec 2012

Wireless Cable, HDTV, Cable Telephony, Interactive Television

Some of the future enhancements for CATV include wireless cable, cable telephony, video on demand (VOD), and interactive television.

Wireless Cable

“Wireless cable” is a term for land-based (terrestrial) wireless distribution systems that use microwave frequencies to deliver video, data, and/or voice signals to end users. There are two basic types of wireless cable systems: multichannel multipoint distribution service (MMDS) and local multipoint distribution service (LMDS).

Multichannel video and data services are being offered over microwave frequencies. The data-over-cable service interface specification (DOCSIS), with a few modifications, can also be used in 2.6 GHz multichannel multipoint distribution service (MMDS) and 28 GHz local multipoint distribution service (LMDS) systems [14]. The DOCSIS specification is being adapted for the wireless cable marketplace: a consortium called “Wireless DSL” is working to produce an adapted version of DOCSIS, called DOCSIS+, that is suitable for offering cable modem technology via microwave transmission. The DOCSIS+ standard has been proposed to the IEEE 802.16 working group for conversion into an official standard.

Figure 1 shows an LMDS system. This diagram shows that the major component of a wireless cable system is the head-end equipment. The head-end equipment is equivalent to a telephone central office. The head-end building has a satellite connection for cable channels and video players for video on demand. The head-end is linked to base stations (BS), which transmit radio frequency signals for reception. An antenna and receiver in the home convert the microwave radio signals into standard television channels for use in the home. As in traditional cable systems, a set-top box decodes the signal for input to the television. Lower frequency wireless cable systems such as MMDS (approx 2.5 GHz) can reach up to approximately 70 miles. Higher frequency LMDS systems (approx 28 GHz) can only reach approximately 5 miles.

Figure 1: Local Multipoint Distribution System (LMDS)

High Definition Television (HDTV)

High definition television (HDTV) is a TV broadcast system that provides higher picture resolution (detail and fidelity) than is provided by conventional NTSC and PAL television signals. HDTV signals can be in analog or digital form.

The specifications for HDTV digital systems allow for many types of data services in addition to digital video service. Digital HDTV channels carry high-speed digital services that can be addressed to a specific customer or group of customers that are capable of decoding and using those services. Examples of these services include: special programming information, software delivery, video or audio delivery (like pay-per-view programming), and instructional materials.

The data rate available for additional services is dynamic and ranges from a few kbps to several Mbps, depending on the video and audio program content. The gross data rate of the HDTV system is 19 Mbps. How much of this rate the HDTV video signal uses depends on the compression technology. Video data compression produces a data rate that varies with the content of the original video signal. When the video program contains rapidly changing scenes, most of the 19 Mbps is required for transmission. If the video signal is not changing rapidly, much of the 19 Mbps can be used for other types of services.

Transmission of the additional services has a lower priority than transmission of the primary program. If the primary service (HDTV) consumes a large part of the data rate (such as during a rapidly changing action scene), the customer may have to wait for some time before receiving large blocks of data.
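
The relationship between video activity and the capacity left over for additional services can be sketched as simple arithmetic. The 19 Mbps gross rate comes from the text above; the per-scene video rates and the 10 MB block size are hypothetical values chosen only for illustration.

```python
GROSS_RATE_MBPS = 19.0  # gross HDTV channel rate stated in the text


def spare_rate_mbps(video_rate_mbps: float) -> float:
    """Return the capacity left for additional (lower priority) data services."""
    if not 0.0 <= video_rate_mbps <= GROSS_RATE_MBPS:
        raise ValueError("video rate must be between 0 and the gross rate")
    return GROSS_RATE_MBPS - video_rate_mbps


def transfer_time_s(size_megabytes: float, video_rate_mbps: float) -> float:
    """Seconds needed to deliver a data block using only the spare capacity."""
    spare = spare_rate_mbps(video_rate_mbps)
    if spare == 0.0:
        return float("inf")  # the primary program is using the whole channel
    return size_megabytes * 8.0 / spare


# Hypothetical scene types and video rates (assumptions, not from the source).
for scene, rate in [("slow scene", 12.0), ("action scene", 18.5)]:
    print(scene, spare_rate_mbps(rate), "Mbps spare,",
          round(transfer_time_s(10.0, rate), 1), "s for a 10 MB block")
```

Under these assumed rates, the same block takes many times longer to arrive during the action scene, which is the waiting effect described above.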

Cable Telephony

Cable telephony is the provision of telephone services that use CATV systems to initiate, process, and receive voice communications. Cable telephony systems can either integrate telephony systems with cable modem networks (a teleservice), or the cable modem system can simply act as a transfer method for Internet telephony (a bearer service). Because of government regulations (restrictions or high operational level requirements) in many countries, some cable operators are delaying the integration of telephone services with their cable networks. In either case, cable telephony systems are data telephony systems that include a voice gateway, a gatekeeper, and a media interface.

A voice gateway is a network device that converts communication signals between data networks and telephone networks. A gatekeeper is a server that translates dialed digits into routing points within the cable network or identifies a forwarding number for the public telephone network. A multimedia transfer adapter converts multiple types of input signals into a common communications format.
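
As a rough illustration of the gatekeeper's role, the sketch below maps dialed digits to a route using longest-prefix matching. The routing table, the addresses, and the function name are all hypothetical; real gatekeepers follow signaling standards that this article does not describe.

```python
from typing import Tuple

# Hypothetical routing table: dialed-digit prefix -> (network, routing point).
ROUTES = {
    "555": ("cable", "mta-10.0.0.7"),   # on-net subscriber reached through its adapter
    "5551": ("cable", "mta-10.0.0.9"),  # more specific on-net range
    "": ("pstn", "voice-gateway-1"),    # default: forward to the public telephone network
}


def route_call(dialed_digits: str) -> Tuple[str, str]:
    """Pick the route whose prefix is the longest match for the dialed digits."""
    best = max(
        (prefix for prefix in ROUTES if dialed_digits.startswith(prefix)),
        key=len,
    )
    return ROUTES[best]


print(route_call("5551234"))     # matches the more specific on-net prefix
print(route_call("2125550100"))  # no on-net prefix, so it goes to the voice gateway
```

The empty-string prefix always matches, so every number that is not on the cable network falls through to the voice gateway.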

Figure 2 shows a CATV system that offers cable telephony services. This diagram shows that a two-way digital CATV system can be enhanced to offer cable telephony services by adding voice gateways to the cable network’s head-end CMTS system and media terminal adapters (MTAs) at the residence or business. The voice gateway connects and converts signals from the public telephone network into data signals that can be transported on the cable modem system. The CMTS system uses a portion of the cable modem signal (data channel) to communicate with the MTA. The MTA converts the telephony data signal to its analog audio component for connection to standard telephones. MTAs are sometimes called integrated access devices (IADs).

Figure 2: Cable Telephony

Because of the high data transmission capability of cable television systems, cable telephony systems can provide video telephony service. Video telephony is a telecommunications service that provides customers with both audio and video signals between their communications devices.

Interactive Television

Interactive television is a combination of cable, television, multimedia, PCs, and network programming that allows dynamic control of media display using inputs from the end-user. Interactive television has three basic types: “pay-per-view” involving programs that are independently billed, “near video-on-demand” (NVOD) with groupings of a single film starting at staggered times, and “video-on-demand” (VOD), enabling request for a particular film to start at the exact time of choice. Interactive television offers interactive advertising, home shopping, home banking, e-mail, Internet access, and games.

Video on demand (VOD) is a service that allows customers to request and receive video services. These video services can be from previously stored media (entertainment movies or education videos) or have a live connection (sporting events in real time).

A limited form of VOD is called near video on demand (NVOD). Near video on demand is a video service that allows a customer to select from a limited number of broadcast video channels. These video channels are typically movie channels that have pre-designated schedule times. Unlike full VOD service, the customer is not able to alter the start or play time of these broadcast videos.

Pay per view (PPV) is a process that allows customers to request the viewing of movies through an unscrambling process. PPV movies are usually broadcast to all customers in a cable television network. To prevent unauthorized viewing, each PPV channel has its own scrambling code. To provide a customer with a reasonable selection of movies, the same movie is broadcast on different channels with start intervals that range from 15 to 60 minutes. To provide twenty PPV movies, approximately 80 to 160 television channels would be required.
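
The channel count for staggered PPV start times can be worked out with a short calculation. The sketch below assumes every movie runs 120 minutes, which is not stated in the text; under that assumption, the quoted range of 80 to 160 channels corresponds to start intervals of 30 and 15 minutes.

```python
import math


def channels_needed(num_movies: int, movie_minutes: int, start_interval_minutes: int) -> int:
    """Channels required so that every movie restarts at the given interval."""
    # Each movie needs enough overlapping copies to cover its own running time.
    per_movie = math.ceil(movie_minutes / start_interval_minutes)
    return num_movies * per_movie


# 20 movies, each assumed to be 120 minutes long (an assumption).
for interval in (15, 30, 60):
    print(interval, "minute interval:", channels_needed(20, 120, interval), "channels")
```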

Analog cable systems provide up to 800 MHz of bandwidth. With 6 MHz wide video channels, this allows up to 120 analog video channels. Digitizing each 6 MHz channel and using compressed digital video (10:1 compression) increases the capacity of a cable system to over 500 digital television channels.
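
The capacity figures above can be checked with a quick calculation. The 800 MHz, 6 MHz, and 120-channel values come from the text; the figure of five compressed programs per 6 MHz channel is an assumption borrowed from the standard definition multicasting figure quoted in the Digital Video section, and it is only one plausible reading of how "over 500" is reached.

```python
TOTAL_BANDWIDTH_MHZ = 800      # from the text
CHANNEL_WIDTH_MHZ = 6          # from the text
ANALOG_CHANNELS_IN_TEXT = 120  # figure quoted in the text

# Upper bound on 6 MHz slots if every megahertz carried video.
max_slots = TOTAL_BANDWIDTH_MHZ // CHANNEL_WIDTH_MHZ


def digital_capacity(analog_channels: int, programs_per_channel: int) -> int:
    """Digital programs carried when each 6 MHz slot holds several compressed programs."""
    return analog_channels * programs_per_channel


# Assumption: 5 standard definition programs per 6 MHz channel.
print(max_slots, "slots at most;", digital_capacity(ANALOG_CHANNELS_IN_TEXT, 5), "digital programs")
```

The upper bound of 133 slots is slightly above the 120 channels quoted, which suggests that part of the spectrum is not used for video channels.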

Cable converter boxes, known as set-top boxes, have different reception and decoding capabilities. Set-top boxes are required to convert distributed signals into a format suitable for viewing. Set-top boxes also can coordinate access to video on demand channels.

An electronic programming guide (EPG) is an interface (portal) that allows a customer to preview and select from a list of available content media. EPGs can vary from simple program selection to interactive filters that dynamically allow the user to filter through program guides by theme, time period, or other criteria.
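
A minimal sketch of the kind of filtering an interactive EPG might perform is shown below. The `Listing` record, the sample guide data, and the `filter_guide` function are hypothetical and exist only to illustrate filtering by theme and time period.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Listing:
    title: str
    theme: str
    start_hour: int  # 0-23, local time


# Hypothetical guide data for illustration only.
GUIDE = [
    Listing("Evening News", "news", 18),
    Listing("Space Documentary", "education", 20),
    Listing("Late Movie", "movies", 22),
    Listing("Morning Movie", "movies", 9),
]


def filter_guide(guide, theme=None, earliest=0, latest=23):
    """Return listings matching an optional theme within an inclusive hour window."""
    return [
        item for item in guide
        if (theme is None or item.theme == theme)
        and earliest <= item.start_hour <= latest
    ]


# Movies that start at 18:00 or later.
print([item.title for item in filter_guide(GUIDE, theme="movies", earliest=18)])
```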

Figure 3 shows a video on demand system. This diagram shows that multiple video players are available and that these video players can be accessed by the end customer through the set-top box. When the customer browses through the available selection list, they can select the media to play.

Figure 3: Video on Demand (VOD)

Hypervideo is a video program delivery system that allows the embedding of links (hotspots) inside a streaming video signal. This allows the customer (or receiving device) to dynamically alter the presentation of streaming information. Examples of hypervideo could be pre-selection of preferred advertising types or interactive game shows.

Synchronized television (syncTV) is a video program delivery application that simultaneously transmits hypertext markup language (HTML) data that is synchronized with television programming. Synchronized television allows the simultaneous display of a video program along with additional information or graphics that may be provided by advertisers or other information providers.

Digital Video

Digital broadcasting is the sending of a digital signal through a common channel to a group of users that may be capable of decoding some or all of the broadcast information.

Digital Television (DTV) is a method of transferring video images and their audio components through digital transmission. There are several formats used for DTV including high quality digital MPEG and 28.8 video.

Digital video is the sending of a sequence of picture signals (frames) that are represented by binary data (bits) that describe a finite set of color and luminance levels. Sending a digital video picture involves the conversion of a scanned image to digital information that is transferred to a digital video receiver. The digital information contains characteristics of the video signal and the position of the image (bit location) that will be displayed. Digital television continues to send information in the form of frames and pixels. The major difference is the frames and pixels are represented by digital information instead of a continuously varying analog signal.

The first digital television broadcast license for the United States was issued to a Hawaiian television station in September 1997. Digital television sends the video signal in digitally modulated form. Ironically, many television signals have been captured and stored in digital form for over 10 years. To be transmitted through analog transmission systems and reach analog televisions, these digital video signals must first be converted to standard analog television (NTSC or PAL).

When digital transmission is used, most digital video systems use some form of data compression. Data compression involves the characterization of a single picture into its components. For example, if the picture were a view of blue sky, it could be characterized by a small number of data bits that indicate the color (blue) and the starting and ending corners. This may require under 10 bytes of information. When this digital information is received, it will create a blue box that may contain over 7,200 pixels. Sent without compression, a color picture of this size would have required several thousand bytes of information for only one picture.
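
The blue sky example can be sketched in a few lines. The encoding below (one color byte plus two corner coordinates) is a hypothetical format invented for illustration, not the scheme used by any real video standard, and it assumes one byte per pixel and coordinates below 256.

```python
import struct
from typing import List

BLUE = 3  # hypothetical palette index for "blue" (one byte per pixel)


def encode_box(color: int, x0: int, y0: int, x1: int, y1: int) -> bytes:
    """Describe a filled box as one color byte plus two corner coordinates."""
    return struct.pack("5B", color, x0, y0, x1, y1)


def decode_box(data: bytes) -> List[List[int]]:
    """Expand the 5-byte description back into rows of pixel values."""
    color, x0, y0, x1, y1 = struct.unpack("5B", data)
    width, height = x1 - x0 + 1, y1 - y0 + 1  # corners are inclusive
    return [[color] * width for _ in range(height)]


packed = encode_box(BLUE, 0, 0, 119, 60)
pixels = decode_box(packed)
pixel_count = sum(len(row) for row in pixels)
print(len(packed), "bytes describe", pixel_count, "pixels")
```

Here 5 bytes stand in for 7,320 pixels, which would otherwise take 7,320 bytes at one byte per pixel.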

In addition to the data compression used on one picture (one frame), digital compression allows comparison between frames. This allows sections of a previous frame to be repeated. For example, a single frame may be a picture of a city with many buildings. This is a very complex picture, and data compression will not be as efficient as in the blue sky example above. However, the next frame will be another picture of the city with only a few changes. The data compression can send only the data that has changed between frames.

Digital television broadcasting that uses video compression technology allows for “multicasting” (simultaneously sending) several “standard definition” television channels (normally up to five channels) in the same bandwidth as a standard analog television channel. Unfortunately, high definition digital television channels require a much higher data transmission rate and it is likely that only a single HDTV channel can be sent on a digital television channel.

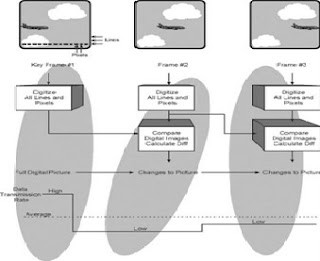

Figure 1 demonstrates the operation of the basic digital video compression system. Each video frame is digitized and then sent for digital compression. The digital compression process creates a sequence of frames (images) that starts with a key frame. The key frame is digitized and used as the reference point for the compression process. Between the key frames, only the differences in images are transmitted. This dramatically reduces the data transmission rate needed to represent a digital video signal: an uncompressed digital video signal requires over 50 Mbps, compared to less than 4 Mbps for a typical digital video disk (DVD) digital video signal.
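
The key frame and difference idea can be illustrated with a toy encoder. This is a minimal sketch that compares pixels one by one; real systems such as MPEG use far more elaborate techniques, and the 8-pixel "frames" below are hypothetical.

```python
def encode(frames):
    """Encode frames as one key frame plus per-frame lists of changed pixels."""
    key = list(frames[0])
    deltas = []
    previous = key
    for frame in frames[1:]:
        # Record only the positions whose value differs from the previous frame.
        changes = [
            (index, value)
            for index, (old, value) in enumerate(zip(previous, frame))
            if old != value
        ]
        deltas.append(changes)
        previous = frame
    return key, deltas


def decode(key, deltas):
    """Rebuild every frame by applying each delta to the previous frame."""
    frames = [list(key)]
    for changes in deltas:
        frame = list(frames[-1])
        for index, value in changes:
            frame[index] = value
        frames.append(frame)
    return frames


# Hypothetical frames; only one pixel changes between consecutive frames.
video = [
    [5, 5, 5, 5, 9, 9, 9, 9],
    [5, 5, 5, 5, 9, 9, 7, 9],
    [5, 5, 5, 5, 9, 9, 7, 7],
]
key_frame, frame_deltas = encode(video)
assert decode(key_frame, frame_deltas) == video  # the round trip is lossless
print(frame_deltas)
```

Only the key frame is sent in full; each later frame is described by a single changed pixel, which is where the reduction in data rate comes from.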

Technologies- Analog Video

Some of the key technologies used in CATV systems include analog video.

Analog Video

Analog video contains a rapidly changing signal (analog) that represents the luminance and color information of a video picture. Sending a video picture involves the creation and transfer of a sequence of individual still pictures called frames. Each frame is divided into horizontal and vertical lines. To create a single frame picture on a television set, the frame is drawn line by line. The process of drawing these lines on the screen is called scanning. The frames are drawn to the screen in two separate scans. The first scan draws half of the picture and the second scan draws between the lines of the first scan. This scanning method is called interlacing. Each line is divided into pixels that are the smallest possible parts of the picture. The number of pixels that can be displayed determines the resolution (quality) of the video signal. The video signal breaks down the television picture into three parts: the picture brightness (luminance), the color (chrominance), and the audio.
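
The order in which an interlaced frame is drawn can be shown with a short sketch. The 9-line frame is a toy size chosen for readability, and the function name is hypothetical.

```python
def interlaced_scan_order(total_lines: int) -> list:
    """Return line numbers in the order they are drawn: one scan, then the other."""
    first_scan = list(range(1, total_lines + 1, 2))   # lines 1, 3, 5, ...
    second_scan = list(range(2, total_lines + 1, 2))  # lines 2, 4, 6, ... fill the gaps
    return first_scan + second_scan


print(interlaced_scan_order(9))  # a 9-line toy frame, not a real television standard
```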

There are three primary systems used for analog television broadcasting: NTSC, PAL, and SECAM. The National Television System Committee (NTSC) system is used in much of the Americas, while PAL and SECAM are primarily used in the UK and other countries. The major differences between the analog television systems are the number of lines of resolution and the methods used for color transmission.

There have been enhancements made to analog video systems over the past 50 years. These include color video, stereo audio, separate audio programming channels, slow data rate digital transfer (for closed captioning), and ghost canceling. The next major change to television technology will be its conversion to HDTV.

Figure 1 demonstrates the operation of the basic NTSC analog television system. The video source is broken into 30 frames per second and converted into multiple lines per frame. Each video line transmission begins with a burst pulse (called a sync pulse) that is followed by a signal that represents color and intensity. The time relative to the starting sync pulse determines the position on the line from left to right. Each line is sent until a frame is complete and the next frame can begin. The television receiver decodes the video signal to position and control the intensity of an electron beam that scans the phosphor-coated tube (“picture tube”) to recreate the display.
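
The link between timing and horizontal position can be worked through numerically. The 30 frames per second figure is from the text; the 525 lines per frame is the NTSC line count, which the text does not state. As a simplification, the sketch ignores blanking intervals and treats the whole line period as visible picture.

```python
FRAMES_PER_SECOND = 30   # nominal rate given in the text
LINES_PER_FRAME = 525    # NTSC line count (not stated in the text)

# Time available to send one line, in microseconds.
LINE_PERIOD_US = 1_000_000 / (FRAMES_PER_SECOND * LINES_PER_FRAME)


def horizontal_position(time_since_sync_us: float) -> float:
    """Fraction of the way across the line (0.0 = left edge, 1.0 = right edge).

    Simplification: blanking intervals are ignored.
    """
    if not 0.0 <= time_since_sync_us <= LINE_PERIOD_US:
        raise ValueError("time must fall within one line period")
    return time_since_sync_us / LINE_PERIOD_US


print(round(LINE_PERIOD_US, 2), "microseconds per line")
print(horizontal_position(LINE_PERIOD_US / 2))  # halfway through the line period
```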

Simple Telecom Technologies

Telecommunication transmission technology is often lumped into two categories: analog transmission and digital transmission. Figure 4.1 depicts a basic telecommunications transmission system that transfers digital information from one source to an information receiver. The information source is supplied to an end-node that converts the information to a form that can be transmitted through the transmission medium (air, copper, or fiber). The receiving end-node converts the transmission signal into a form that is compatible with the receiver of the information.

Basic Transmission System

Analog

Analog transmission is a process of transferring signals that can have many different signal levels and frequencies between end-nodes. Because analog signals can continuously change to many different levels (voltages) at changing rates (frequencies), the transfer of analog signals (such as an audio signal) requires the transmission medium to have similar transfer characteristics for all parts (levels and frequencies) of the transmission signal. Analog transmission systems must be robust enough to transfer the signal unaltered for specific voltage levels and frequency components (e.g., high and low frequency).

Figure below shows an analog transmission system. This diagram shows that an audio acoustic (sound) signal is converted by a microphone to an audio electrical signal prior to transmission on a copper line. This audio electrical signal is amplified by an end-node to increase the signal level for transmission on a copper wire. This amplification is necessary to overcome the transmission loss of the copper wire. As the signal progresses down the copper wire, some of the signal energy is converted to heat reducing the signal level. Another amplifier (the receiving end-node) is located at the receiving end to increase the signal to a level suitable for the information receiver (audio speaker).

Analog Transmission System

Digital

Digital transmission is the process of transferring information from node to node in a form that can only have specific levels (usually logic 1 and logic 0). Digital signals have a limited number of different levels (voltages) that represent digital information. Transferring digital signals (such as a computer’s data signal) only requires the transmission medium to transfer two levels without precisely (linearly) transferring levels in between the two levels.

Figure below shows a digital transmission system. This diagram shows a computer that is sending digital data (one equal to +5 volts and zero equal to 0 volts) to an end-node. The end-node is a channel service unit (CSU) and digital service unit (DSU) that converts the levels from the computer to levels suitable for the copper wire transmission medium (logic 1 = +5V and logic 0 = -5V). As the digital signal transfers down the copper wire, some of the energy is converted to heat and some of the frequency components are attenuated resulting in a slightly distorted (rounded) digital pulse arriving at the receiving end-node. Because the receiving CSU/DSU only needs to sense two levels, it is able to re-create the original undistorted digital signal (also known as digital signal regeneration).

Digital Transmission System

Analog Signal Processing

Analog signals (continuously varying signals) may be processed by filters, shaping circuits, combiners, and amplifiers to change their shape and modify their content.

Signal Filtering

Filters may remove (band-reject) or allow (band-pass) portions of analog signals (possibly audio signals) that contain a range of high and low frequencies that are not necessary to transmit. In some cases, additional signals (at different frequencies) may be combined with audio signals prior to transmission. These signals may be multiple channels or may be signals that are used for control purposes. If control signals are added to an analog signal that is transmitted, they are usually removed from the audio signal in the receiver by filtering.

Figure below shows typical audio signal processing for a communications transmitter. In this example, the audio signal is processed through a filter to remove very high and very low frequency parts (audio band-pass filter). These unwanted frequency parts are possibly noise and other out of audio frequency signals that could distort the desired signal. The high frequencies can be seen as rapid changes in the audio signal. After an audio signal is processed by the audio band-pass filter, the sharp edges of the audio signal (high frequency components) are removed.

Audio Signal Filtering
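The filtering described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the single-pole filters and the function names (`low_pass`, `high_pass`, `band_pass`) and the `alpha` coefficients are hypothetical choices made for the example, not the circuit shown in the figure.

```python
def low_pass(samples, alpha):
    """Single-pole low-pass filter: smooths rapid changes (high frequencies)."""
    out = []
    y = 0.0
    for x in samples:
        y += alpha * (x - y)      # move part of the way toward the new input
        out.append(y)
    return out


def high_pass(samples, alpha):
    """Remove very slow changes by subtracting a heavily smoothed copy."""
    slow = low_pass(samples, alpha)
    return [x - s for x, s in zip(samples, slow)]


def band_pass(samples, low_alpha=0.01, high_alpha=0.5):
    """Cascade: drop very low frequencies first, then very high frequencies."""
    return low_pass(high_pass(samples, low_alpha), high_alpha)


# A sharp edge (a step) comes out rounded, and a constant level fades away.
step = [0.0] * 10 + [1.0] * 10
rounded = band_pass(step)
```

The largest sample-to-sample jump in `rounded` is smaller than the jump in `step`, which is the "sharp edges removed" effect the text describes.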

Signal Amplification

Signal amplification is a process of sensing an input (usually low level) signal and converting the signal into a larger version of itself. An amplifier device provides this conversion process. Amplifiers increase both the desired signal and unwanted noise signals. Noise signals are any random disturbance or unwanted signal in a communication system that tends to obscure the clarity of a signal in relation to its intended use.

Figure below shows how a signal may be amplified. This diagram shows that the input signal is increased in value by an amplifier that can vary its gain (amount of amplification).
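A short sketch can show why amplification alone does not improve signal quality: the same gain is applied to the desired signal and to the noise. The sample values and the helper names (`amplify`, `power`) are assumptions made for illustration.

```python
def amplify(samples, gain):
    """Multiply every sample by the amplifier gain."""
    return [gain * s for s in samples]


def power(samples):
    """Average power of a sampled signal."""
    return sum(s * s for s in samples) / len(samples)


signal = [0.1, -0.1, 0.1, -0.1]      # desired low-level signal (made-up values)
noise = [0.01, 0.01, -0.01, -0.01]   # unwanted noise (made-up values)
gain = 20.0

snr_in = power(signal) / power(noise)
snr_out = power(amplify(signal, gain)) / power(amplify(noise, gain))
# snr_in and snr_out are equal (up to rounding): the amplifier raises
# the level of both the signal and the noise by the same factor.
```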

Signal Shaping

Audio signals may be processed by shaping circuits to add or remove emphasis of frequency (tone) or intensity (volume). When the signal processing involves differences in amplification of specific frequency components of an input signal, it is called pre-emphasis and de-emphasis. When the signal processing involves relative changes in the amount of amplification dependent on the level of the input signal, it is called companding and expanding.

Some analog transmission systems use pre-emphasis circuits to amplify the high frequency components of the audio input signal, which allows the modulation system to be more effective. Certain modulation systems do not respond well to low amplitudes of high frequency input signals. By boosting the high frequency component of the input audio signal, the modulator better translates the input signal into a modulated carrier signal. When pre-emphasis is used for transmission, a matched de-emphasis system is used in the receiver to convert the boosted high frequency component back into its original low signal level.
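As a rough sketch of matched pre-emphasis and de-emphasis, the example below uses a simple first-order difference filter on sampled values. The filter form and the coefficient `a = 0.9` are assumptions chosen for illustration; real analog systems use analog networks with their own time constants.

```python
def pre_emphasis(samples, a=0.9):
    """Boost rapid changes: y[n] = x[n] - a * x[n-1]."""
    out = []
    prev = 0.0
    for x in samples:
        out.append(x - a * prev)
        prev = x
    return out


def de_emphasis(samples, a=0.9):
    """Matched inverse filter: x[n] = y[n] + a * x[n-1]."""
    out = []
    prev = 0.0
    for y in samples:
        prev = y + a * prev
        out.append(prev)
    return out


fast = [1.0, -1.0] * 4   # rapidly alternating (high frequency) input
slow = [1.0] * 8         # unchanging (very low frequency) input

boosted = pre_emphasis(fast)            # settles near +/-1.9
reduced = pre_emphasis(slow)            # settles near 0.1
restored = de_emphasis(boosted)         # matches the original fast signal
```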

The intensity of an audio signal can vary dramatically because some people talk loudly and others talk softly. A system that reduces the amount of amplification (gain) of an audio signal for larger input signals (e.g., louder talker) is called companding. The use of companding allows the level of audio signal that enters the modulator to have a smaller overall range (higher minimum and lower maximum). High signals and low signals input to a modulator may have a different conversion level (ratio of modulation compared to input signal level). This can create distortion so companding allows the modulator to convert the information signal (audio signal) with less distortion. Of course, the process of companding must be reversed at the receiving end, called expanding, to recreate the original audio signal.

Figure below shows the basic signal companding and expanding process. This diagram shows that the amount of amplifier gain is reduced as the level of input signal is increased. This keeps the input level to the modulator to a relatively small dynamic range. At the receiving end of the system, an expanding system is used to provide additional amplification to the upper end of the output signal. This recreates the shape of the original input audio signal.

Analog Signal Companding and Expanding

Digital Signal Processing

Digital signal processing refers to a category of electronic devices that represent and process information that is in discrete signal level (digital) formats. It also refers to the manipulation of digital signals to change their content and to add error detection and correction capability.

Digital signals typically vary in two levels; on (logic 1) and off (logic 0). A bit is the smallest part of a digital signal, typically called a data bit. A bit typically can assume two levels: either a zero (0) or a one (1). A byte is an agreed-upon group of bits, typically eight. A byte typically represents one alphabetic or special character, two decimal digits, or eight binary bits of information.
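The three readings of a byte mentioned above can be illustrated with one 8-bit value. The sketch assumes that "two decimal digits" refers to packed binary-coded decimal (one digit per 4 bits), which is an interpretation and not something the text states; the helper names are hypothetical.

```python
byte = 0b01000001                    # eight bits, value 65

as_bits = format(byte, "08b")        # the eight binary bits: '01000001'
as_char = chr(byte)                  # one alphabetic character: 'A'


def pack_bcd(tens, ones):
    """Store two decimal digits in one byte, 4 bits per digit (assumed BCD)."""
    return (tens << 4) | ones


def unpack_bcd(value):
    """Recover the two decimal digits from a packed byte."""
    return value >> 4, value & 0x0F


as_digits = unpack_bcd(byte)         # two decimal digits: (4, 1)
```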

When analog signals are converted to digital format, the digital signals represent the original analog waveform. Just like analog signals that may be processed by filters, shaping circuits, combiners and amplifiers, digital signals can be processed to produce similar functions. However, because the signal is in digital form, these functions are performed by software programs that manipulate the data.

Unlike analog signals, digital signals can be recreated to their original form. This process is called signal regeneration. To increase the efficiency of a transmission signal (allow more users per channel), digital signals can be analyzed for redundancy and the digital signal data can be compressed. Digital signals can also be processed in a way that helps overcome the effects of signal distortion that can result in the incorrect determination of a digital signal (whether a zero or one had been sent). This is called error detection and error correction processing. When digital signals represent the original analog signal, advanced echo canceling software programs can be used to reduce the effects of echoes that are caused by feedback in the audio and transmission system. Some systems use dedicated digital signal processors (DSPs) to manipulate the incoming digital information via a program (stored instructions) that produces a new digital output. This allows software programs to perform many functions (such as signal filtering) that previously required complex dedicated electronic circuits.

Digitization of an Analog Signal

Analog signals must be converted to digital form for use in a digital wireless system. To convert analog signals to digital form, the analog signal is digitized by using an analog-to-digital (pronounced A to D) converter. The A/D converter periodically senses (samples) the level of the analog signal and creates a binary number or series of digital pulses that represent the level of the signal.

The common conversion process is Pulse Code Modulation (PCM). For most PCM systems, the analog signal is typically sampled 8000 times a second. Each sample produces 8 digital bits, which results in a digital data rate (bit stream) of 64 thousand bits per second (64 kbps).

Figure below shows how an analog signal is converted to a digital signal. This diagram shows that an acoustic (sound) signal is converted to an audio electrical signal (continuously varying signal) by a microphone. This signal is sent through an audio band-pass filter that only allows frequency ranges within the desired audio band (removes unwanted noise and other non-audio frequency components). The audio signal is then sampled every 125 microseconds (8,000 times per second) and converted into 8 digital bits. The digital bits represent the amplitude of the input analog signal.

Signal Digitization
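The sampling arithmetic above (8,000 samples per second, 8 bits per sample) can be checked with a short sketch. The `quantize` function uses plain uniform (linear) steps, which is a simplifying assumption for illustration; the names `SAMPLE_RATE`, `BITS_PER_SAMPLE`, `quantize`, and `digitize` are hypothetical.

```python
import math

SAMPLE_RATE = 8000        # samples per second
BITS_PER_SAMPLE = 8

bit_rate = SAMPLE_RATE * BITS_PER_SAMPLE       # 64000 bits per second
sample_period_us = 1_000_000 / SAMPLE_RATE     # 125.0 microseconds


def quantize(x, bits=BITS_PER_SAMPLE):
    """Map an amplitude in [-1.0, 1.0] to an unsigned code using uniform steps."""
    levels = 2 ** bits
    x = max(-1.0, min(1.0, x))                 # clip out-of-range input
    return min(levels - 1, int((x + 1.0) / 2.0 * levels))


def digitize(freq_hz, n_samples):
    """Sample a sine tone at SAMPLE_RATE and convert each sample to a code."""
    return [
        quantize(math.sin(2 * math.pi * freq_hz * n / SAMPLE_RATE))
        for n in range(n_samples)
    ]


codes = digitize(1000, 8)   # one cycle of a 1 kHz tone is 8 samples long
```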

Digital bytes of information are converted to specific voltage levels based on the value (weighting) of the binary bit position. In the binary system, the value of the next sequential bit is 2 times larger. For PCM systems that are used for telephone audio signals, the weighting of bits within a byte of information (8 bits) is different from the binary system. This is a companding process: the lower-order bits are given larger values than their binary equivalents, and this skewing of the weighting values increases the dynamic range of the digital signal that represents the analog signal.

Two common encoding laws are Mu-Law and A-Law encoding. Mu-Law encoding is primarily used in the Americas and A-Law encoding is used in the rest of the world. When different types of encoding systems are used, a converter is used to translate the different coding levels.
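The shape of the Mu-Law curve can be sketched with the standard continuous formula, y = sgn(x) · ln(1 + μ|x|) / ln(1 + μ), with μ = 255. This is only the idealized curve; the segmented 8-bit encoding used in telephone equipment is not reproduced here, and the function names are hypothetical.

```python
import math

MU = 255.0


def mu_law_compress(x):
    """Compress an amplitude in [-1.0, 1.0] along the continuous Mu-Law curve."""
    return math.copysign(math.log1p(MU * abs(x)) / math.log1p(MU), x)


def mu_law_expand(y):
    """Inverse of mu_law_compress."""
    return math.copysign(((1.0 + MU) ** abs(y) - 1.0) / MU, y)


quiet = mu_law_compress(0.01)    # a quiet input is raised to roughly 0.23
loud = mu_law_compress(1.0)      # full scale stays at 1.0
restored = mu_law_expand(quiet)  # expanding returns approximately 0.01
```

Quiet inputs occupy a much larger share of the output range than they would with linear coding, which is the skewed weighting described above.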

Digital Signal Regeneration

To overcome the effects of noise on transmitted signals, digital transmission systems use digital signal regeneration to restore the quality of the signal as it moves through a network. Digital signal regeneration is the process of reception and restoration of a digital pulse or lightwave signal to its original form after its amplitude, waveform, or timing have been degraded by normal factors during transmission. The resultant signal is virtually free of noise or distortion.

Figure below shows the process of digital signal regeneration. This example shows an original digital signal (a) and added noise (b) to produce a combined digital signal with noise (c). The regeneration process detects maximum and minimum expected values (threshold points) and recreates the original digital signal (d).
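The regeneration steps (a) through (d) can be sketched as follows. The single mid-level decision threshold and the noise values are assumptions made for illustration; the function name `regenerate` is hypothetical.

```python
def regenerate(received, threshold=0.5, high=1.0, low=0.0):
    """Rebuild clean pulses: anything above the threshold becomes a full 'high'."""
    return [high if level > threshold else low for level in received]


original = [0.0, 1.0, 1.0, 0.0, 1.0, 0.0, 0.0, 1.0]        # (a) original signal
noise = [0.1, -0.2, 0.15, 0.3, -0.25, -0.1, 0.2, -0.3]      # (b) added noise
received = [o + n for o, n in zip(original, noise)]         # (c) signal with noise
restored = regenerate(received)                             # (d) regenerated signal
```

As long as the noise never pushes a level across the threshold, `restored` is identical to `original`.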

Data Compression

To increase the amount of information that a transmission system can transfer, digital systems may use data compression. Data compression is a processing technique for encoding information so that fewer data bits of information are required to represent a given amount of data. Compression allows the transmission of more data over a given amount of time and circuit capacity. It also reduces the amount of memory required for data storage.

Digital compression either analyzes a digital signal for redundant information (repeated 1’s or 0’s) or breaks the information content of the digital signal into component parts (such as speech patterns or video frames). All of this processing allows the data transmission rate to be reduced by sending only the characteristics of the signal rather than the complete digital signal. Some data compression systems can only reduce data rates by a factor of 2:1 (e.g., ADPCM audio compression), while advanced digital compression can reduce data rates by a factor of approximately 200:1 (e.g., MPEG video compression). A device that performs both data compression and decompression is called a COder/DECoder (CoDec).
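Removing redundancy from repeated 1's or 0's can be illustrated with run-length encoding, one of the simplest compression techniques. It is used here only as an example of the idea; it is not the method used by ADPCM or MPEG, and the function names are hypothetical.

```python
from itertools import groupby


def rle_encode(bits):
    """Replace each run of identical bits with a (bit, run_length) pair."""
    return [(bit, len(list(run))) for bit, run in groupby(bits)]


def rle_decode(runs):
    """Rebuild the original bit string from (bit, run_length) pairs."""
    return "".join(bit * count for bit, count in runs)


runs = rle_encode("0000001111100")
# runs == [('0', 6), ('1', 5), ('0', 2)]
```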

When a digital signal is compressed for voice communications, the device that performs the compression is called a voice coder (Vo-coder) or speech coder. The Vo-coder is a digital signal processing device that analyzes speech signals so that it can produce a lower data rate compressed digital signal. The difference between standard data compression and voice data compression is the analysis of the information source (speech) and the elimination of the compression process for non-voice signals. Speech coding usually involves the use of data tables (called code books) that represent information parts that can be associated with human sound. Because non-human sounds can be eliminated from the code book, the number of bits that are used to create a compressed digital voice signal can be reduced.

Figure below shows the digital voice compression process. In this example, a digital signal is continuously applied to a digital signal analysis device. The analysis portion of the speech coder extracts the amplitude, pitch, and other key parameters of the signal and then looks up related values in the code book for the portion of sound it has analyzed. Only key parameters and code book values are transmitted. This results in data compression ratios of 4:1 to over 16:1.

Digital Voice Compression

Error Detection and Error Correction

To help reduce the effects of errors on data transmission, error detection and error correction systems are used in most communication systems. Error detection systems use a process of adding some data bits to the transmitted data signal that are used to help determine if bits were received in error due to distorted transmission. Error correction is made possible by sending bits that have a relationship to the data that is contained in the desired data block or message. These related bits permit a receiver of information to detect and/or correct errors that may have occurred during data transmission.

A common measurement of the performance of a communication system is the proportion of bits received in error, called the bit error rate (BER). The BER is the ratio of the number of bits received in error to the total number of bits received.
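The BER ratio can be computed directly by comparing the bits that were sent with the bits that were received. The function name and the sample bit strings are made up for illustration.

```python
def bit_error_rate(sent, received):
    """Ratio of bits received in error to the total number of bits received."""
    if len(sent) != len(received):
        raise ValueError("bit sequences must have the same length")
    errors = sum(s != r for s, r in zip(sent, received))
    return errors / len(received)


ber = bit_error_rate("1011001110", "1001001010")
# Two of the ten bits differ, so ber is 0.2.
```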

Error detection processing involves the creation of additional bits that are sent with the original data. The additional check bits are created by using a formula calculation on the digital signal prior to sending the data. After the digital signal is received, the formula can be used again to create check bits from the received digital signal. If the check bits match, the original digital signal was received correctly. If the check bits do not match, some (or all) of the digital signal was received in error. This process is called error detection.

Some digital systems use sophisticated mathematical formulas to create the check bits so that the check bits can be used to make corrections (or predictions of the correct bits) to the received digital signal. This process is called error correction.

Figure below shows the basic error detection and correction process. This diagram shows that a sequence of digital bits is supplied to a computing device that produces a check bit sequence. The check bit sequence is sent in addition to the original digital bits. When the check bits are received, the same formula is used to check to see if any of the bits received were in error.

Error Detection and Correction
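Both ideas can be sketched with two very simple schemes: an even parity bit for error detection and a triple-repetition code with majority voting for error correction. These are chosen only because they are easy to follow; practical systems generally use stronger formulas, and the function names are hypothetical.

```python
def parity_bit(bits):
    """Check bit formula: 1 if the number of ones is odd, else 0."""
    return sum(bits) % 2


def add_check_bit(bits):
    """Sender: append the check bit to the data bits."""
    return bits + [parity_bit(bits)]


def check_ok(block):
    """Receiver: recompute the check bit and compare it with the one received."""
    return parity_bit(block[:-1]) == block[-1]


def encode_repeat(bits):
    """Sender: transmit every bit three times."""
    return [b for b in bits for _ in range(3)]


def decode_repeat(coded):
    """Receiver: majority vote over each group of three corrects a single error."""
    return [1 if sum(coded[i:i + 3]) >= 2 else 0 for i in range(0, len(coded), 3)]


data = [1, 0, 1, 1]

block = add_check_bit(data)          # [1, 0, 1, 1, 1]
damaged = block.copy()
damaged[2] ^= 1                      # one bit flipped in transit
detected = not check_ok(damaged)     # the error is detected, not located

coded = encode_repeat(data)
coded[4] ^= 1                        # one bit flipped in transit
corrected = decode_repeat(coded)     # majority vote restores the data
```

A single parity bit can only signal that something went wrong, while the repetition code spends three times the bits to be able to repair a single error per group.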

Echo Cancellation

Echo cancellation is a process of extracting a delayed version of an original transmitted signal (audio echo) from the received signal. Echoes may be created through acoustic feedback, where some of the audio signal transfers from a speaker into a microphone.

Echoed signals cause distortion and may be removed through advanced signal analysis and filtering. Figure 3.18 shows an example of the echo cancellation process. This diagram shows how the combining of two signals, the original plus a delayed version of the original, produces a complex signal. The echo canceling system analyzes the complex signal and uses the signal analysis to create variations of the likely echo signal. This prediction of the echoed signal is subtracted from the complex signal to reproduce the original signal without the echo.

Echo Cancellation
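The subtraction step of echo cancellation can be sketched as follows. The example assumes the echo delay and echo strength are already known; a real echo canceller has to estimate them from the signal, and that analysis is not shown. The function names and sample values are hypothetical.

```python
def add_echo(signal, delay, gain):
    """Combine a signal with a delayed, weaker copy of itself."""
    return [
        s + (gain * signal[n - delay] if n >= delay else 0.0)
        for n, s in enumerate(signal)
    ]


def cancel_echo(received, delay, gain):
    """Subtract the predicted echo, built from samples already recovered."""
    clean = []
    for n, r in enumerate(received):
        predicted_echo = gain * clean[n - delay] if n >= delay else 0.0
        clean.append(r - predicted_echo)
    return clean


original = [1.0, 0.5, -0.5, 0.0, 0.25, -1.0, 0.0, 0.0]
with_echo = add_echo(original, delay=3, gain=0.4)     # the complex signal
recovered = cancel_echo(with_echo, delay=3, gain=0.4)
```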

Echoed signals can also occur in signals other than audio signals. When echoes occur on radio channels (the broadband signal), it is usually the result of the same signal that travels on different paths to reach its destination. This is called multipath propagation. Echo canceling can be used to reduce the effects of radio multipath propagation.

Digital Signal Processor (DSP)

A digital signal processor (DSP) is an electronics device or assembly (typically an integrated circuit) that is designed to process signals through the use of embedded microprocessor instructions. The use of DSPs in communication circuits allows manufacturers to quickly and reliably develop advanced communications systems through the use of software programs. The software programs (often called modules) perform advanced signal processing functions that previously required complex dedicated electronics circuits. Although manufacturers may develop their own software modules, DSP software modules are often developed by other companies that specialize in specific types of communication technologies. For example, a manufacturer may purchase a software module for echo canceling from one DSP software module developer and a modulator software module from a different DSP software module developer. Because DSPs use these software modules, if new technologies such as speech compression, channel coding, or modulation techniques are developed, the manufacturer only has to change the software programs in the DSP to utilize the new technology.

The figure below shows a typical digital signal processor that is used in a digital communication system. This diagram shows that a DSP contains signal input and output lines, a microprocessor assembly, interrupt lines from assemblies that may require processing, and software program instructions. This diagram shows that this DSP has three software programs: digital signal compression, channel coding, and modulation coding. The digital signal compression software analyzes the digital audio signal and compresses the information to a lower data transmission rate. The channel coding adds control signals and error protection bits. The modulation coding formats (shapes) the output signal so it can be directly applied to an RF modulator assembly. This diagram also shows that an optional interface is included to allow updating of the software programs that are stored in the DSP.

Digital Signal Processor (DSP)

Basic Communication Technologies: Signal Types

Signal Types

There are two basic types of signals: analog and digital. Many communication systems receive analog signals (e.g., audio signals), convert them to a digital format, transport the digital signals through a network, and reconvert the digital signals back to their analog form when they reach their destination.

Analog

An analog signal can vary continuously between a maximum and minimum value and it can assume an infinite number of values between the two extremes.

Figure below shows a sample analog signal created by sound. In this example, as the sound pressure from a person’s voice is detected by a microphone, it is converted to its equivalent electrical signal. Also, the analog audio signal continuously varies in amplitude (height, loudness, or energy) as time progresses.

Digital

Digital signals have a limited number of discrete states, usually two, in contrast to analog signals that vary continuously and have an infinite number of states. Digital signals transfer discrete signal levels at predetermined time intervals. Digital signals typically have two levels: on (logic 1) and off (logic 0). The information contained in a single time period is called a bit. The number of bits that are transferred in one second is called the data transfer rate, measured in bits per second (bps). Because many bits are typically transferred in 1 second, the data rate unit is typically preceded by a multiplier k (thousand) or M (million). For example, a data transfer rate of 3 million bits per second is written as 3 Mbps. Bits are typically combined into groups of 8 bits to form a byte. When the reference is made to bytes instead of bits, the b is capitalized. For example, 10 thousand bytes is represented by 10 kB. Figure below shows a sample digital signal. In this example, the bits 01011010 are transferred in 1 second. This results in a bit rate of 8 bps.
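The data rate arithmetic and the k and M multipliers can be sketched as follows. The function names are hypothetical and the formatting rules are a simplification for illustration.

```python
def bit_rate_bps(bits, seconds):
    """Number of bits transferred divided by the time taken."""
    return len(bits) / seconds


def format_rate(bps):
    """Express a rate with the k (thousand) or M (million) multiplier."""
    if bps >= 1_000_000:
        return f"{bps / 1_000_000:g} Mbps"
    if bps >= 1_000:
        return f"{bps / 1_000:g} kbps"
    return f"{bps:g} bps"


rate = bit_rate_bps("01011010", 1)     # 8 bits in 1 second
label = format_rate(rate)              # '8 bps'
fast_label = format_rate(3_000_000)    # '3 Mbps'
```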

The earliest form of digital radio communication was Morse Code. To send Morse Code, the radio transmitter was simply turned on and off to form dots and dashes. The receiver would sense (detect) the radio carrier to reproduce the dots and dashes. A code book of dots and dashes was used to decode the message into symbols or letters. The on and off pulses, or bits, that make up a modern digital signal are sent in a similar way.
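Turning a transmitter on and off to form dots and dashes can be sketched as follows. The table covers only four letters for brevity, and the timing (a dot is on for 1 unit, a dash for 3 units, with 1 unit off between elements and 3 units off between letters) follows the usual Morse convention. The function names are hypothetical.

```python
# Partial code book: only the letters needed for the example.
MORSE = {"S": "...", "O": "---", "E": ".", "T": "-"}


def to_morse(text):
    """Look up each letter in the code book; letters are separated by spaces."""
    return " ".join(MORSE[ch] for ch in text.upper())


def key_transmitter(morse):
    """Return the on/off pattern as a string: '1' = carrier on, '0' = carrier off."""
    letters = []
    for letter in morse.split(" "):
        pulses = ["1" if symbol == "." else "111" for symbol in letter]
        letters.append("0".join(pulses))   # 1 unit off between dots and dashes
    return "000".join(letters)             # 3 units off between letters


message = to_morse("SOS")
print(message)                   # ... --- ...
print(key_transmitter(message))
```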

The trend in communication systems, just as in other types of electronic products such as compact discs, is to change from analog systems to digital systems. Digital systems have a number of important advantages, including the fact that digital signals are more immune to noise. In a digital system, unlike an analog one, errors that noise introduces into the bit stream can often be detected and corrected. Digital signals can also be easily manipulated or processed in useful ways using modern computer techniques.
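One simple way to see how errors in a bit stream can be corrected is a repetition code, in which each bit is sent three times and the receiver takes a majority vote. This is a deliberately basic sketch; practical systems use more efficient codes, and the function names here are hypothetical.

```python
def encode(bits, n=3):
    """Repeat every bit n times before transmission."""
    return [b for b in bits for _ in range(n)]


def decode(received, n=3):
    """Majority vote over each group of n received bits."""
    return [
        1 if sum(received[i:i + n]) > n // 2 else 0
        for i in range(0, len(received), n)
    ]


message = [0, 1, 0, 1, 1, 0, 1, 0]
sent = encode(message)

# Simulate noise: flip one bit in two different groups.
noisy = list(sent)
noisy[4] ^= 1
noisy[10] ^= 1

recovered = decode(noisy)
print(recovered == message)
```

The vote recovers the message as long as no more than one bit in each group of three is corrupted, at the cost of sending three times as many bits.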

Video Communications

Video communication is the transmission and reception of video (multiple images) using electrical or optical transmission signals. Telecommunications systems can transfer video signals in analog or digital form.

Analog Video

Analog video is the representation of a series of multiple images (video) through the use of rapidly changing signals (analog). This analog signal indicates the position, luminance, and color information within the video signal.

Sending a video picture involves the creation and transfer of a sequence of individual still pictures called frames. Each frame is divided into horizontal and vertical lines. To create a single frame picture on a television set, the frame is drawn line by line. The process of drawing these lines on the screen is called scanning. The frames are drawn to the screen in two separate scans. The first scan draws half of the picture and the second scan draws between the lines of the first scan. This scanning method is called interlacing. Each line is divided into pixels, which are the smallest possible parts of the picture. The number of pixels that can be displayed determines the resolution (quality) of the video signal. The video signal divides the television picture into three parts: the picture brightness (luminance), the color (chrominance), and the audio.
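Interlacing can be sketched by treating a frame as a list of scan lines. The first scan takes every other line starting from the top, the second scan takes the lines in between, and the display weaves the two back together. The function names and the five-line frame are illustrative assumptions.

```python
def split_fields(frame):
    """Split a frame into two scans: lines 0, 2, 4, ... and lines 1, 3, 5, ..."""
    return frame[0::2], frame[1::2]


def weave(first_scan, second_scan):
    """Rebuild the frame by alternating lines from the two scans."""
    frame = []
    for i in range(len(first_scan) + len(second_scan)):
        source = first_scan if i % 2 == 0 else second_scan
        frame.append(source[i // 2])
    return frame


frame = ["line 0", "line 1", "line 2", "line 3", "line 4"]
first, second = split_fields(frame)
print(first)    # lines drawn by the first scan
print(second)   # lines drawn between them by the second scan
print(weave(first, second) == frame)
```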

Digital Video

Digital video is a sequence of picture signals (frames) that are represented by binary data (bits) that describe a finite set of color and luminance levels. Sending a digital video picture involves the conversion of a scanned image to digital information that is transferred to a digital video receiver. The digital information contains characteristics of the video signal and the position of the image (bit location) that will be displayed.
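Because each pixel is described by a fixed number of bits, the amount of data in an uncompressed digital video signal follows from simple multiplication. The figures below (720 by 480 pixels, 24 bits per pixel, 30 frames per second) are assumed values chosen for illustration, and the function names are hypothetical.

```python
def bits_per_frame(width, height, bits_per_pixel):
    """Every pixel position in the frame carries the same number of bits."""
    return width * height * bits_per_pixel


def raw_bit_rate(width, height, bits_per_pixel, frames_per_second):
    """Uncompressed bit rate: bits in one frame times frames sent per second."""
    return bits_per_frame(width, height, bits_per_pixel) * frames_per_second


frame_bits = bits_per_frame(720, 480, 24)
rate_bps = raw_bit_rate(720, 480, 24, 30)
print(frame_bits)                      # bits in one frame
print(f"{rate_bps / 1_000_000:.1f} Mbps")
```

Rates of this size are one reason digital video is normally compressed before transmission.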


Analog Versus Digital

Comparison chart

| | Analog | Digital |
|---|---|---|
| Technology: | Analog technology records waveforms as they are. | Converts analog waveforms into a set of numbers and records them. The numbers are converted into a voltage stream for representation. |
| Uses: | Can be used in various computing platforms and under operating systems like Linux, Unix, Mac OS and Windows | Computing and electronics |
| Signal: | Analog signal is a continuous signal which transmits information as a response to changes in a physical phenomenon | Digital signals are discrete time signals generated by digital modulation |
| Representation: | Uses continuous range of values to represent information | Uses discrete or discontinuous values to represent information |
| Applications: | Thermometer | PCs, PDAs |
| Data transmissions: | Not of high quality | High quality |
| Response to Noise: | More likely to get affected, reducing accuracy | Less affected, since noise responses are analog in nature |
| Waves: | Denoted by sine waves | Denoted by square waves |
| Example: | Human voice in air | Electronic devices |

Summary:

Analog signals can be converted into digital signals by using an analog-to-digital converter. A modem does the related job of carrying digital data over an analog channel.

Digital signals use binary values to send and receive data between computers.

Digital signals are easier and more reliable to transmit, with fewer errors.

Analog signals are replicas of sound waves that can be distorted by noise, which lowers the quality of transmission.

Digital data can typically be transmitted at a faster rate than analog, which gives better productivity.
